Circuit Breaker Patterns for Multi-Tenant Carrier Integration: Isolating Failures Without Cascading Outages

When FedEx's API went down at 2 AM and took out shipping for 40% of your tenants, you learned something important about circuit breakers. Traditional circuit breaker patterns don't handle multi-tenant carrier integration gracefully—when one carrier fails, the blast radius shouldn't encompass every tenant on your platform.

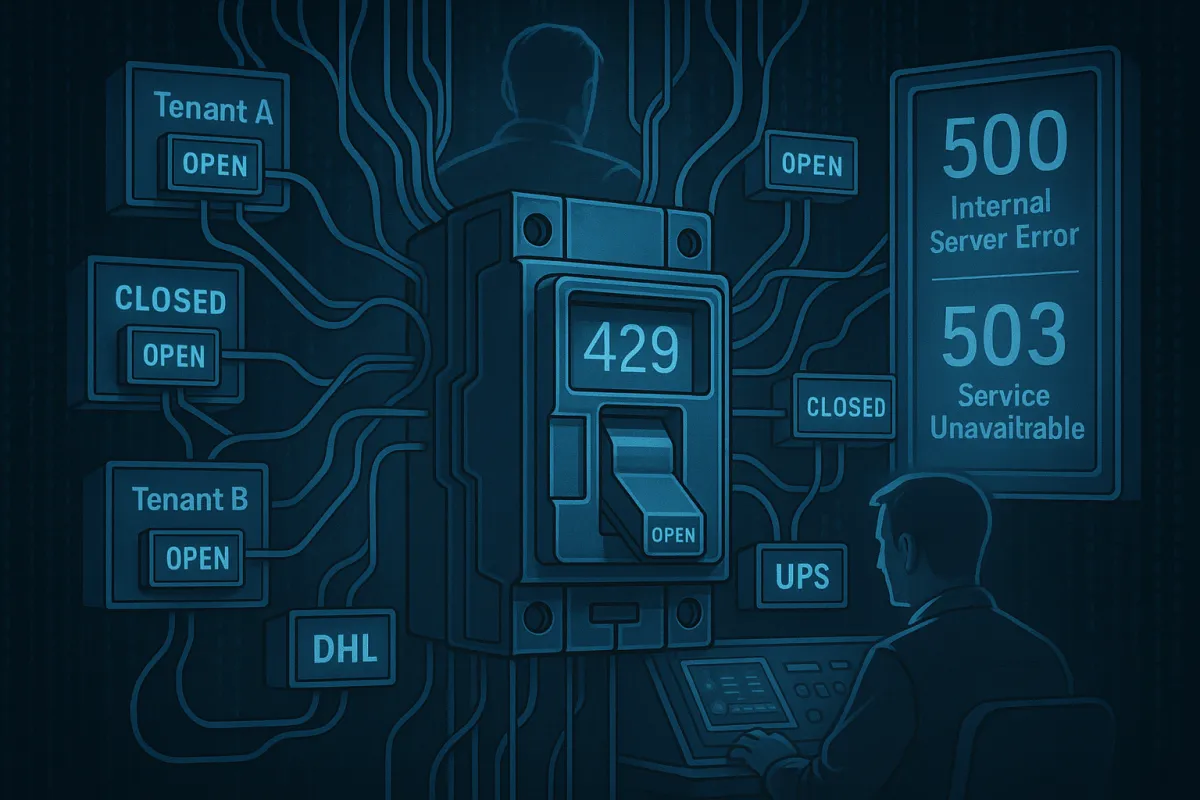

Most circuit breaker implementations treat failure as binary: open or closed, healthy or broken. But in multi-tenant carrier integration software, you need surgical precision—a UPS rate limit affecting Tenant A's high-volume Black Friday traffic shouldn't trigger circuit breakers for Tenant B's routine DHL shipments.

The Multi-Tenant Carrier Challenge

Most carrier APIs sit at the slower, less actively maintained end of the performance scale, with the occasional "unicorn" API that breaks this pattern. But the real complexity emerges when you're serving hundreds of tenants across dozens of carriers simultaneously.

Consider this scenario: When DHL temporarily suspended B2C shipments to the U.S. valued over $800, businesses dependent on a single global carrier discovered how vulnerable single-carrier strategies can be. Now multiply that across a multi-tenant platform where Tenant A ships electronics via UPS, Tenant B uses DHL for pharmaceuticals, and Tenant C relies on FedEx for automotive parts.

Standard circuit breaker implementations create two problems here. First, they operate at service boundaries—if the "UPS service" fails, all UPS traffic stops. Second, they don't differentiate between failure types. A 500 Internal Server Error means something went wrong server-side, while a 503 Service Unavailable indicates the external service is overloaded or down for maintenance. A 429 rate limit for one tenant's bulk operations shouldn't trigger the same response as a genuine service outage.

Leading platforms handle this differently. Cargoson implements tenant-aware circuit breakers alongside competitors like nShift, EasyPost, and ShipEngine, but the architectural approaches vary significantly. Some pool all tenant traffic through shared circuit breakers; others isolate each tenant completely.

Circuit Breaker Fundamentals for Carrier APIs

A service client should invoke a remote service via a proxy that functions like an electrical circuit breaker. When consecutive failures cross a threshold, the circuit breaker trips (opens), and for a timeout period all attempts fail immediately. After the timeout expires, the breaker enters a half-open state and allows a limited number of test requests through: if they succeed, it closes and normal traffic resumes; if they fail, it reopens and the timeout starts again.
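The closed, open, and half-open cycle described above can be sketched as a small state machine. This is a minimal single-threaded illustration, not a production implementation: the class name, the `failure_threshold` and `recovery_timeout` parameters, and the injectable `clock` are all assumptions made for the example.

```python
import time
from enum import Enum


class State(Enum):
    CLOSED = "closed"
    OPEN = "open"
    HALF_OPEN = "half-open"


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, recovery_timeout=60.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold  # consecutive failures before opening
        self.recovery_timeout = recovery_timeout    # seconds to stay open
        self.clock = clock                          # injectable so tests can control time
        self.state = State.CLOSED
        self.failures = 0
        self.opened_at = 0.0

    def call(self, func, *args, **kwargs):
        if self.state is State.OPEN:
            if self.clock() - self.opened_at < self.recovery_timeout:
                raise CircuitOpenError("circuit open, failing fast")
            self.state = State.HALF_OPEN  # timeout elapsed: allow a trial call
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._on_failure()
            raise
        self._on_success()
        return result

    def _on_success(self):
        # Any success resets the consecutive-failure count and closes the circuit.
        self.failures = 0
        self.state = State.CLOSED

    def _on_failure(self):
        self.failures += 1
        # A failed trial call reopens immediately; otherwise wait for the threshold.
        if self.state is State.HALF_OPEN or self.failures >= self.failure_threshold:
            self.state = State.OPEN
            self.opened_at = self.clock()
```

A shared, multi-worker deployment would also need locking or an external store for the state, which this sketch leaves out.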

For carrier APIs, you need different thresholds for different failure scenarios:

Rate Limiting (429) Failures: These require immediate circuit opening with shorter recovery windows. If UPS returns 429 responses, you're hitting quota limits and should back off for 60-300 seconds depending on the carrier's reset window.

Service Unavailable (503) Failures: This indicates the external service is overloaded or down for maintenance. The circuit should open after 3-5 consecutive failures, with recovery windows of 5-15 minutes for label generation and 30 seconds for tracking calls.

Timeout Failures: Network timeouts need aggressive circuit breaking. Label generation typically has 10-30 second SLAs with customers, so 3 timeouts over 60 seconds should trigger a 2-minute circuit opening.

Based on production data from enterprise shipping platforms, typical thresholds look like:

- Label generation: 5% failure rate over 1 minute triggers open circuit, 5-minute recovery window

- Rate shopping: 10% failure rate over 30 seconds, 1-minute recovery

- Tracking calls: 15% failure rate over 2 minutes, 30-second recovery
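One way to encode these per-operation thresholds is a small lookup table plus a rolling-window failure-rate check. The sketch below copies the three sets of values from the list above; the `Threshold` and `RollingFailureRate` names and the `min_calls` guard (which keeps a single failure in a tiny sample from tripping the circuit) are assumptions for illustration.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    failure_rate: float      # fraction of failed calls that trips the circuit
    window_seconds: float    # rolling window over which the rate is measured
    recovery_seconds: float  # how long the circuit stays open


# Values taken from the list above.
THRESHOLDS = {
    "label_generation": Threshold(0.05, 60, 300),
    "rate_shopping": Threshold(0.10, 30, 60),
    "tracking": Threshold(0.15, 120, 30),
}


class RollingFailureRate:
    def __init__(self, threshold, min_calls=20):
        self.threshold = threshold
        self.min_calls = min_calls  # assumption: ignore windows with too few calls
        self.events = deque()       # (timestamp, succeeded) pairs, oldest first

    def record(self, timestamp, succeeded):
        self.events.append((timestamp, succeeded))
        # Drop events that have fallen out of the rolling window.
        cutoff = timestamp - self.threshold.window_seconds
        while self.events and self.events[0][0] < cutoff:
            self.events.popleft()

    def should_trip(self):
        total = len(self.events)
        if total < self.min_calls:
            return False
        failed = sum(1 for _, ok in self.events if not ok)
        return failed / total >= self.threshold.failure_rate
```

Keeping the thresholds in data rather than code makes it easier to tune them per carrier later without touching the breaker logic.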

Tenant-Aware Circuit Breaker Architecture

The key insight: circuit breaker state should be scoped by tenant-carrier combination, not globally. The idea mirrors shared-database, shared-schema multi-tenancy, where all tenants share the same tables but each tenant's data is tagged with a unique identifier and every query filters by tenant ID.

Apply this pattern to circuit breakers using Redis key patterns:

    # Circuit breaker state keys
    cb:tenant123:ups:shipping:state = "closed"
    cb:tenant123:ups:shipping:failures = 2
    cb:tenant123:ups:shipping:last_failure = 1735689600
    cb:tenant456:dhl:tracking:state = "half-open"

    # Global carrier health (for fallback decisions)
    carrier:ups:global_health = "degraded"
    carrier:dhl:global_health = "healthy"

This structure enables surgical failure isolation. When Tenant 123's UPS circuit opens due to rate limiting, Tenant 456's DHL operations continue normally. Circuit breakers prevent cascading failures in microservices by stopping repeated requests to an unresponsive service and allowing it time to recover.
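To make the key scheme concrete, here is a small sketch of a key builder and a dict-backed store. The store is a stand-in for Redis so the example runs on its own; `breaker_key` and `InMemoryBreakerStore` are hypothetical names, and treating a missing key as "closed" is an assumption.

```python
def breaker_key(tenant_id, carrier, operation, field):
    """Build a key such as cb:tenant123:ups:shipping:state."""
    for part in (tenant_id, carrier, operation, field):
        if ":" in part:
            # A colon inside a part would make two different scopes collide.
            raise ValueError(f"key part must not contain ':': {part!r}")
    return f"cb:{tenant_id}:{carrier}:{operation}:{field}"


class InMemoryBreakerStore:
    """Dict-backed stand-in for Redis, for illustration only."""

    def __init__(self):
        self._data = {}

    def get_state(self, tenant_id, carrier, operation):
        # Assumption: a breaker with no recorded state is closed.
        key = breaker_key(tenant_id, carrier, operation, "state")
        return self._data.get(key, "closed")

    def set_state(self, tenant_id, carrier, operation, state):
        self._data[breaker_key(tenant_id, carrier, operation, "state")] = state


store = InMemoryBreakerStore()
store.set_state("tenant123", "ups", "shipping", "open")
print(store.get_state("tenant123", "ups", "shipping"))  # open
print(store.get_state("tenant456", "ups", "shipping"))  # closed
```

Opening the breaker for one tenant leaves the same carrier and operation untouched for every other tenant, which is the isolation property the key layout is meant to provide.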

Thread pool isolation becomes critical here. When a request is received, the server allocates one thread to call the service. If this service is delayed due to failure and multiple requests come in, more threads will be allocated and all will have to wait. Separate thread pools per tenant-carrier combination prevent one tenant's carrier issues from exhausting threads for other tenants.

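    # Example architecture in pseudo-code
    class TenantCarrierCircuitBreaker:
        def __init__(self, tenant_id, carrier, operation):
            self.key = f"cb:{tenant_id}:{carrier}:{operation}"
            # One bounded pool per tenant-carrier pair (bulkhead)
            self.thread_pool = get_tenant_carrier_pool(tenant_id, carrier)

        def call(self, func, *args, **kwargs):
            # Submit to the pool instead of using it as a context manager:
            # `with pool:` would shut the pool down after the first call.
            future = self.thread_pool.submit(
                self._execute_with_circuit_breaker, func, *args, **kwargs)
            return future.result()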
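The pseudo-code leaves `get_tenant_carrier_pool` undefined. A minimal sketch of one possible version, using the standard library's `ThreadPoolExecutor`, is shown below. The registry, the `max_workers` default, and the `call_with_timeout` helper are assumptions for illustration.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

_pools = {}
_pools_lock = threading.Lock()


def get_tenant_carrier_pool(tenant_id, carrier, max_workers=4):
    """Return the bounded pool for this tenant-carrier pair, creating it once."""
    key = (tenant_id, carrier)
    with _pools_lock:
        pool = _pools.get(key)
        if pool is None:
            pool = ThreadPoolExecutor(
                max_workers=max_workers,
                thread_name_prefix=f"{tenant_id}-{carrier}",
            )
            _pools[key] = pool
        return pool


def call_with_timeout(tenant_id, carrier, func, *args, timeout=30.0):
    """Run func on the pair's pool; a slow call raises a timeout error in the caller."""
    future = get_tenant_carrier_pool(tenant_id, carrier).submit(func, *args)
    return future.result(timeout=timeout)
```

Because each pool is capped at `max_workers`, a stalled carrier can tie up at most that many threads for one tenant. Two caveats: a timed-out call keeps running on its worker thread until the underlying request returns, and with hundreds of tenants the number of pools grows quickly, so a real system might share pools across low-volume tenants.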

Carrier-Specific Failure Classification

Circuit breakers detect failures and prevent them from constantly recurring during maintenance or temporary issues. They act like a switch that opens to stop the flow of requests to a failing service, allowing it time to recover.

Not all carrier failures deserve the same response. Here's how to classify them:

UPS API Patterns:

- 429 Rate Limit: Open circuit immediately, 5-minute recovery

- 401 Authentication: Don't circuit break, fix credentials

- 503 Service Unavailable: Circuit break after 3 failures, 10-minute recovery

- Timeout > 30s: Circuit break after 2 failures, 2-minute recovery

FedEx API Patterns:

- Rate limits tend to be more forgiving but when hit, require longer backoff periods

- Their maintenance windows are predictable (Sunday 3-6 AM EST)

- SSL certificate issues occasionally surface—these shouldn't trigger circuit breaks

Production monitoring shows UPS APIs fail differently than DHL or FedEx. A 502 Bad Gateway means a gateway or proxy in front of the carrier's service received an invalid response from its upstream server, which typically indicates a communication problem inside the carrier's infrastructure. For UPS, this often means their load balancer is routing to unhealthy instances. For DHL, it usually indicates temporary network issues.
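The UPS rules listed above can be expressed as a single classification function that tells the breaker what to do with each failure. This is a sketch under stated assumptions: the `Action` and `Decision` types are invented for the example, and reusing the 503 policy for other 5xx responses (such as 502) and ignoring other 4xx responses are choices the list does not specify.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Action(Enum):
    OPEN_NOW = "open_now"  # trip the circuit immediately
    COUNT = "count"        # count toward the failure threshold
    IGNORE = "ignore"      # not a carrier-health signal


@dataclass(frozen=True)
class Decision:
    action: Action
    failures_to_trip: Optional[int] = None
    recovery_seconds: Optional[int] = None


def classify_ups_failure(status: Optional[int], timed_out: bool = False) -> Decision:
    """Map a failed UPS call to a breaker decision, using the values listed above."""
    if timed_out:
        return Decision(Action.COUNT, failures_to_trip=2, recovery_seconds=120)
    if status == 429:
        return Decision(Action.OPEN_NOW, recovery_seconds=300)
    if status == 401:
        # Credentials problem: fix the tenant's configuration, don't break the circuit.
        return Decision(Action.IGNORE)
    if status == 503:
        return Decision(Action.COUNT, failures_to_trip=3, recovery_seconds=600)
    if status is not None and 500 <= status < 600:
        # Assumption: other 5xx responses (e.g. 502) reuse the 503 policy.
        return Decision(Action.COUNT, failures_to_trip=3, recovery_seconds=600)
    # Assumption: remaining 4xx responses are request errors, not outages.
    return Decision(Action.IGNORE)
```

A FedEx or DHL variant would follow the same shape with its own numbers, so each carrier's policy stays in one place.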

Graceful Degradation Strategies

When circuit breakers open, your platform needs intelligent fallback routing. Systems can automatically assign the best carrier based on destination country, cost, reliability, speed, and customs history, with merchants no longer needing to guess which carrier fits best for a shipment.

Consider a three-tier fallback strategy:

Tier 1 - Primary Carrier: Normal operations with full feature set

Tier 2 - Secondary Carrier: Automatic failover when primary circuit opens

Tier 3 - Degraded Mode: Limited functionality, possibly manual intervention required

Implementation requires carrier capability mapping:

    tenant_carrier_capabilities = {
        "tenant123": {
            "primary": {"carrier": "ups", "features": ["ground", "air", "international"]},
            "secondary": {"carrier": "fedex", "features": ["ground", "air"]},
            "fallback": {"carrier": "dhl", "features": ["ground"]}
        }
    }
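Given a mapping like the one above, tier selection reduces to walking the tiers in order and skipping any carrier that lacks the required feature or whose circuit is open. The sketch below is one possible shape; `select_carrier` and the `is_circuit_open` callback are hypothetical names, and returning `None` stands for Tier 3 degraded mode.

```python
TENANT_CARRIER_CAPABILITIES = {
    "tenant123": {
        "primary": {"carrier": "ups", "features": ["ground", "air", "international"]},
        "secondary": {"carrier": "fedex", "features": ["ground", "air"]},
        "fallback": {"carrier": "dhl", "features": ["ground"]},
    }
}

TIER_ORDER = ("primary", "secondary", "fallback")


def select_carrier(tenant_id, required_feature, is_circuit_open):
    """Return (tier, carrier) for the first usable tier, or None for degraded mode."""
    tiers = TENANT_CARRIER_CAPABILITIES.get(tenant_id, {})
    for tier in TIER_ORDER:
        entry = tiers.get(tier)
        if entry is None:
            continue
        if required_feature not in entry["features"]:
            continue  # this carrier cannot serve the shipment at all
        if is_circuit_open(tenant_id, entry["carrier"]):
            continue  # breaker is open for this tenant-carrier pair
        return tier, entry["carrier"]
    return None


# Usage: UPS is open for tenant123, so an air shipment fails over to FedEx.
open_circuits = {("tenant123", "ups")}
choice = select_carrier("tenant123", "air", lambda t, c: (t, c) in open_circuits)
print(choice)  # ('secondary', 'fedex')
```

With the same open circuit, an international shipment returns `None` because neither FedEx nor DHL lists that feature. The capability check matters as much as the breaker state: failing over to a carrier that cannot serve the shipment only moves the error.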

The Publish-Subscribe architectural pattern is highly recommended for building scalable webhook systems, where your application serves as the publisher, sending events to a central message broker that delivers to subscribed consumers. Use this pattern for webhook delivery when circuits open—notify customers of carrier switches and delivery impacts.
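As a rough illustration of that flow, the sketch below uses an in-process broker to fan out a "circuit opened" event to subscribers. A real deployment would put a message queue in this role; the `EventBroker` class, the `circuit.opened` topic name, and the event fields are all assumptions made for the example.

```python
from collections import defaultdict


class EventBroker:
    """Minimal in-process broker; a stand-in for a real message queue."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, event):
        """Deliver event to every subscriber of topic; return the number delivered."""
        delivered = 0
        for handler in list(self._subscribers.get(topic, ())):
            try:
                handler(event)
                delivered += 1
            except Exception:
                # One failing consumer must not block the others.
                continue
        return delivered


broker = EventBroker()
notifications = []
broker.subscribe("circuit.opened", notifications.append)
broker.publish("circuit.opened", {
    "tenant": "tenant123",
    "carrier": "ups",
    "operation": "shipping",
    "failover_carrier": "fedex",
})
```

Publishing the event from the breaker and letting a separate consumer send the webhook keeps slow or failing customer endpoints out of the shipping request path.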

SLA preservation becomes critical. If your platform promises 99.9% uptime for label generation, circuit breakers should preserve this by failing over to secondary carriers rather than returning errors to customers.

Observability and Tenant-Level Metrics

Circuit breaker observability requires tenant-scoped metrics. Measure delivery latency from when a webhook is triggered to when it's received, with lower latency ensuring real-time updates. You should slice this data based on tenant ID, destination URL, etc.

Key metrics to track per tenant-carrier combination:

- Circuit State Distribution: Percentage of time spent in closed/open/half-open states

- Failure Rate by Error Type: Separate 429s, 503s, timeouts, and connection errors

- Recovery Time: How quickly circuits close after opening

- Tenant Impact: Which tenants are most affected by which carrier issues
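The first metric, circuit state distribution, can be derived from a log of state transitions. The function below is a small sketch; its name and the `(timestamp, state)` input format are assumptions, and timestamps are plain seconds.

```python
def state_distribution(transitions, end_time):
    """Fraction of time spent in each state.

    transitions: time-ordered list of (timestamp, state) pairs.
    end_time: end of the observation period.
    """
    if not transitions:
        return {}
    span = end_time - transitions[0][0]
    if span <= 0:
        return {}
    totals = {}
    # Pair each transition with the start of the next one (or end_time for the last).
    boundaries = [t for t, _ in transitions[1:]] + [end_time]
    for (start, state), stop in zip(transitions, boundaries):
        totals[state] = totals.get(state, 0.0) + (stop - start)
    return {state: duration / span for state, duration in totals.items()}


log = [(0, "closed"), (60, "open"), (90, "half-open"), (100, "closed")]
print(state_distribution(log, end_time=200))
```

For this log the breaker was closed for 160 of 200 seconds, open for 30, and half-open for 10. Computing this per tenant-carrier key shows which pairs spend the most time away from the closed state.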

Grafana dashboard queries (PromQL) might look like:

    # Circuit breaker trip rate by tenant-carrier
    rate(circuit_breaker_trips_total{tenant="$tenant", carrier="$carrier"}[5m])

    # Failure distribution by error type
    sum by (error_type) (rate(circuit_breaker_failures_total{tenant="$tenant"}[5m]))

    # 95th percentile recovery time
    histogram_quantile(0.95, sum by (le) (rate(circuit_breaker_recovery_duration_seconds_bucket[1h])))

Monitor retry rates—high retry rates may indicate issues with webhook delivery or recipient availability. An ideal retry rate should be less than 5%. This applies equally to circuit breaker retry attempts.

Production Patterns and Edge Cases

Real-world complications make textbook circuit breaker implementations insufficient. Consider these scenarios:

Partial API Failures: UPS tracking works but shipping label generation fails. Standard circuit breakers would trip globally, but you need operation-specific circuits.

Carrier Maintenance Windows: Carriers announce maintenance windows, and your circuit breakers should respect these instead of treating them as failures. More broadly, DHL's Trade Atlas 2025 predicts global trade volumes will grow at 3.1% annually from 2024 to 2029, so breaker configuration also needs to stay flexible as trade regulations change.

Bulk Operations: When a tenant uploads 10,000 shipment requests, carrier APIs may throttle aggressively. Circuit breakers need bulk operation awareness to avoid triggering on expected rate limiting.
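Both the maintenance-window and bulk-operation cases come down to deciding whether a failure should count toward the breaker at all. A minimal sketch of that filter follows; the function names, the window format (pairs of timezone-aware datetimes), and the `bulk_in_progress` flag are assumptions for illustration.

```python
from datetime import datetime, timezone


def in_maintenance(now, windows):
    """True if now falls inside any announced (start, end) window."""
    return any(start <= now < end for start, end in windows)


def counts_toward_breaker(now, status, *, maintenance_windows=(), bulk_in_progress=False):
    """Decide whether a failed call should be recorded as a breaker failure."""
    if in_maintenance(now, maintenance_windows):
        return False  # announced downtime is expected, not a failure signal
    if status == 429 and bulk_in_progress:
        return False  # throttling during a bulk upload is expected
    return True


window = (
    datetime(2025, 1, 5, 8, 0, tzinfo=timezone.utc),
    datetime(2025, 1, 5, 11, 0, tzinfo=timezone.utc),
)
during = datetime(2025, 1, 5, 9, 30, tzinfo=timezone.utc)
print(counts_toward_breaker(during, 503, maintenance_windows=[window]))  # False
```

Skipping the failure count does not mean sending traffic into a known outage: requests during a maintenance window would still be routed to a fallback carrier, and throttled bulk requests would still be queued and retried at a slower pace.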

Here's a production example from an anonymized outage report:

"At 14:32 UTC, UPS began returning 503 responses for label generation while rate shopping remained healthy. Standard circuit breakers would have failed over all UPS traffic, but our operation-scoped breakers allowed rate shopping to continue while label generation failed over to FedEx. Recovery began at 14:47 UTC when UPS label generation resumed. Total impact: 15 minutes of elevated FedEx usage costs, no customer-facing downtime."

By adopting the Circuit Breaker pattern, software systems become more resilient, responsive, and fault-tolerant—key attributes for any modern, scalable application. This approach prevents cascading failures by stopping repeated requests to unresponsive services and allowing time to recover.

The key is balancing tenant isolation with operational efficiency. Too much isolation creates management overhead; too little risks cascading failures. Production deployments typically settle on tenant-carrier-operation scoping with global carrier health as a secondary signal.

Your next steps: audit your current circuit breaker implementation for tenant awareness, implement carrier-specific failure classification, and add tenant-scoped observability. The investment pays dividends when the next carrier outage hits at peak shipping season.